ReLU Activation Function Variants

The ReLU activation function suffers from a problem known as dying ReLUs: during training, some neurons effectively die, meaning they stop outputting anything other than 0. A neuron dies when its weights get tweaked in such a way that the weighted sum of its inputs is negative for all instances in the training set. When this happens, it keeps outputting zeros, and Gradient Descent no longer affects it because the gradient of the ReLU function is zero when its input is negative. This post introduces some important variants of the ReLU activation function.
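
To make the dying-ReLU condition concrete, here is a minimal sketch in plain Python. The toy inputs, weights, and bias are hypothetical values chosen so that the weighted sum is negative for every instance; they do not come from any real training run.

```python
def relu(z):
    """ReLU: pass positive inputs through, clamp everything else to 0."""
    return max(0.0, z)


def relu_grad(z):
    """Derivative of ReLU: 1 for positive input, 0 otherwise (0 is used at z == 0)."""
    return 1.0 if z > 0 else 0.0


# Hypothetical toy training set: three instances with two features each.
X = [[1.0, 2.0], [0.5, 1.5], [2.0, 0.1]]

# Hypothetical weights and bias that push the weighted sum below zero everywhere.
weights = [-1.0, -1.0]
bias = -0.5

pre_activations = [sum(w * x for w, x in zip(weights, row)) + bias for row in X]
outputs = [relu(z) for z in pre_activations]
grads = [relu_grad(z) for z in pre_activations]

print(pre_activations)  # every weighted sum is negative
print(outputs)          # so the neuron outputs 0 for every instance
print(grads)            # and no gradient flows back through it
```

Because the gradient is zero for every instance, a Gradient Descent update computed from this data leaves the weights unchanged, which is why the neuron cannot recover on its own.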

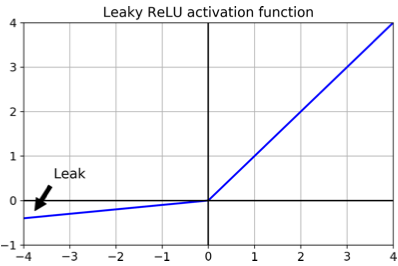

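The leaky ReLU function is defined as LeakyReLU_α(z) = max(αz, z). The hyperparameter α sets the slope of the function for z < 0 (the "leak") and is typically small, for example 0.01. This small slope ensures that leaky ReLUs never truly die, since the gradient for negative inputs is α rather than zero.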
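
A minimal sketch of the leaky ReLU and its gradient in plain Python. The default α = 0.01 is an assumed, commonly used value, and the max form only matches the piecewise definition when 0 ≤ α ≤ 1.

```python
def leaky_relu(z, alpha=0.01):
    """Leaky ReLU as max(alpha * z, z); assumes 0 <= alpha <= 1."""
    return max(alpha * z, z)


def leaky_relu_grad(z, alpha=0.01):
    """Derivative: 1 for positive input, alpha otherwise (never exactly zero if alpha > 0)."""
    return 1.0 if z > 0 else alpha


for z in (-2.0, 0.0, 3.0):
    print(z, leaky_relu(z), leaky_relu_grad(z))
```

Unlike plain ReLU, the gradient for negative inputs is α, so a neuron whose weighted sum is always negative still receives a small update.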

The randomized leaky ReLU (RReLU) picks α randomly in a given range during training and fixes it to an average value during testing. RReLU was reported to perform fairly well and seemed to act as a regularizer, reducing the risk of overfitting the training set.
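
The train/test behavior of RReLU can be sketched as follows. The bounds `lower` and `upper` and the use of a uniform distribution are assumptions made for illustration; the text above only says that α is drawn from a given range and averaged at test time.

```python
import random


def rrelu(z, lower=0.125, upper=1.0 / 3.0, training=True, rng=random):
    """Randomized leaky ReLU for a single scalar input.

    lower/upper are illustrative bounds for alpha, not values from the text.
    """
    if z >= 0:
        return z
    if training:
        # Training: draw a fresh slope for each negative activation.
        alpha = rng.uniform(lower, upper)
    else:
        # Testing: use the fixed average of the range.
        alpha = (lower + upper) / 2.0
    return alpha * z


rng = random.Random(0)  # seeded generator so the training-time draws are repeatable
print([rrelu(-1.0, rng=rng) for _ in range(3)])  # three different slopes
print(rrelu(-1.0, training=False))               # deterministic at test time
```

The random slope injects noise into negative activations during training, which is one plausible reading of why RReLU behaves like a regularizer.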

The parametric leaky ReLU (PReLU) lets α be learned during training: instead of being a hyperparameter, it becomes a parameter that backpropagation can modify like any other. PReLU was reported to strongly outperform ReLU on large image datasets, but on smaller datasets it runs the risk of overfitting the training set.
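
To illustrate what learning α means, here is a small sketch of one Gradient Descent step on α for a single activation. The squared-error loss, the starting value α = 0.25, the learning rate, and the target are all assumptions chosen for the example.

```python
def prelu(z, alpha):
    """Parametric leaky ReLU: identity for positive input, slope alpha otherwise."""
    return z if z > 0 else alpha * z


def prelu_grad_alpha(z):
    """Derivative of prelu with respect to alpha: z for non-positive input, else 0."""
    return 0.0 if z > 0 else z


# Hypothetical single training example and hyperparameters.
alpha = 0.25
z, target = -2.0, -1.0
learning_rate = 0.1

pred = prelu(z, alpha)                        # 0.25 * -2.0 = -0.5
loss_grad = 2.0 * (pred - target)             # derivative of (pred - target)**2
alpha_grad = loss_grad * prelu_grad_alpha(z)  # chain rule
alpha_new = alpha - learning_rate * alpha_grad

print(alpha_new)            # alpha moves from 0.25 toward a steeper slope
print(prelu(z, alpha_new))  # the new prediction is closer to the target
```

Only negative inputs contribute to the gradient of α, so the slope is shaped entirely by the examples that fall on the negative side.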

The ELU (exponential linear unit) function is defined as ELU_α(z) = α(exp(z) − 1) if z < 0, and z if z ≥ 0.
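
A minimal sketch of ELU in plain Python, using the standard piecewise form α(exp(z) − 1) for z < 0 and z otherwise. The default α = 1 is an assumed, commonly used value.

```python
import math


def elu(z, alpha=1.0):
    """ELU: identity for z >= 0, alpha * (exp(z) - 1) for z < 0."""
    # math.expm1(z) computes exp(z) - 1 accurately for small |z|.
    return z if z >= 0 else alpha * math.expm1(z)


def elu_grad(z, alpha=1.0):
    """Derivative: 1 for z >= 0, alpha * exp(z) for z < 0 (nonzero for any finite z)."""
    return 1.0 if z >= 0 else alpha * math.exp(z)


for z in (-5.0, -1.0, 0.0, 2.0):
    print(z, elu(z), elu_grad(z))
```

For large negative inputs the output approaches −α instead of growing without bound, and the gradient stays positive, which helps avoid dead neurons.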

The scaled ELU (SELU) activation function is a scaled variant of the ELU activation function. If we build a neural network composed exclusively of a stack of dense layers, and if all hidden layers use the SELU activation function, then the network will self-normalize: the output of each layer will tend to preserve a mean of 0 and a standard deviation of 1 during training, which solves the vanishing/exploding gradients problem. As a result, the SELU activation function often significantly outperforms other activation functions for such neural nets.
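
The sketch below checks one ingredient of self-normalization numerically: if a pre-activation z follows a standard normal distribution, SELU(z) again has mean close to 0 and standard deviation close to 1. The constants are the commonly published SELU values, and the standard normal input is an idealization (it assumes standardized inputs and suitably initialized weights), so this is an illustration rather than a proof.

```python
import math

# Commonly published SELU constants (not stated in the text above).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(z):
    """Scaled ELU: scale * z for z > 0, scale * alpha * (exp(z) - 1) otherwise."""
    return SELU_SCALE * (z if z > 0 else SELU_ALPHA * math.expm1(z))


def gaussian_moments(f, lo=-10.0, hi=10.0, steps=20000):
    """Approximate E[f(Z)] and E[f(Z)^2] for Z ~ N(0, 1) with the trapezoidal rule."""
    h = (hi - lo) / steps
    mean = 0.0
    second = 0.0
    for i in range(steps + 1):
        z = lo + i * h
        weight = h * (0.5 if i in (0, steps) else 1.0)
        pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
        y = f(z)
        mean += weight * pdf * y
        second += weight * pdf * y * y
    return mean, second


mean, second_moment = gaussian_moments(selu)
std = math.sqrt(second_moment - mean ** 2)
print(mean, std)  # both should be very close to 0 and 1 respectively
```

Mean 0 and standard deviation 1 acting as a fixed point of the activation is what lets the statistics stay stable from layer to layer in a plain stack of dense layers.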

In general, SELU > ELU > leaky ReLU (and its variants) > ReLU > tanh > logistic. If the network’s architecture prevents it from self-normalizing, then ELU may perform better than SELU. If we care about runtime latency, then leaky ReLU may be preferable. If we have spare time and computing power, we can use cross-validation to evaluate other activation functions: RReLU if the network is overfitting, or PReLU if we have a huge training set. ReLU is the most widely used activation function, and many libraries and hardware accelerators provide ReLU-specific optimizations. Therefore, if speed is the priority, ReLU might still be the best choice.
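
These rules of thumb can be written down as a small helper. The function name `suggest_activation`, its flags, and especially the order in which the conditions are checked are hypothetical; the text does not say which rule wins when several apply, so treat this as one possible reading.

```python
def suggest_activation(self_normalizing=True, latency_critical=False,
                       overfitting=False, huge_training_set=False,
                       speed_is_priority=False):
    """Return an activation name following the rules of thumb above.

    The precedence of the checks is an assumption made for this sketch.
    """
    if speed_is_priority:
        return "ReLU"        # benefits from library and hardware optimizations
    if latency_critical:
        return "leaky ReLU"  # cheap to compute at runtime
    if overfitting:
        return "RReLU"       # random slope acts as a regularizer
    if huge_training_set:
        return "PReLU"       # enough data to learn alpha safely
    if not self_normalizing:
        return "ELU"         # SELU loses its advantage without self-normalization
    return "SELU"            # default for a plain stack of dense layers


print(suggest_activation())
print(suggest_activation(self_normalizing=False))
print(suggest_activation(overfitting=True))
```

In practice, a suggestion like this is only a starting point; cross-validation on the actual task remains the way to confirm the choice.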